Watermark removal as a denoising task

Recently, we’ve been investigating state-of-the-art watermarking systems capable of embedding information directly into image data while keeping the changes almost imperceptible to human eyes.

This led us to three prominent implementations: Adobe’s TrustMark, Meta’s Watermark Anything, and Google’s SynthID. While evaluating them, we discovered a simple yet effective image-preserving watermark removal method.

Summary of the watermarking systems

Each system takes a distinct approach to the watermarking problem, with notable differences in payload capacity, licensing, and public availability.

TrustMark

TrustMark is Adobe’s watermarking implementation for the Content Authenticity Initiative (CAI). It embeds a 100-bit payload into images of arbitrary resolution.

TrustMark is open source under the MIT license. The implementation details are documented in the accompanying paper (arXiv:2311.18297), and the complete codebase—including encoding, decoding, and removal models—is publicly available on GitHub. The work was published at ICCV 2025.

Watermark Anything (WAM)

Watermark Anything (WAM) implements localized watermarking, allowing multiple 32-bit watermarks to be embedded in distinct image regions. This enables tracking of individual elements within a single image.

WAM is MIT-licensed with a public paper (arXiv:2411.07231) and codebase. Pre-trained model weights are available for download. The work was accepted at ICLR 2025.

SynthID

SynthID is Google’s watermarking system for AI-generated content, supporting images, audio, text, and video. Google claims robustness against common transformations including cropping, filtering, frame rate changes, and lossy compression.

Unlike TrustMark and WAM, SynthID’s image watermarking architecture is not publicly documented. No codebase or model weights are available. Google operates a “SynthID Detector” verification portal restricted to “trusted partners,” described as a collaboration with “journalists and media professionals.”

On November 20th, 2025, Google released a public SynthID detector via the Gemini app. Users can upload images and query whether they contain SynthID watermarks. The implementation has notable limitations: it does not indicate specific watermarked regions, is rate-limited, and only detects watermarks from Google’s own AI systems.

Denoising

During our examination of TrustMark, we noted that Adobe provides pre-trained models (TrustMark-RM) specifically for removal of TrustMark watermarks.

The TrustMark paper provides the following rationale (Page 2, Section 3, “Methodology”):

We treat watermarking and removal as 2 separate problems, since the former involves trading-off between two data sources (watermark and image content) while the latter resembles a denoising task.

This framing is significant. In principle, an invisible watermark constitutes a structured perturbation applied to an image. Thus, from the perspective of a denoising model, this perturbation should be indistinguishable from other forms of noise.

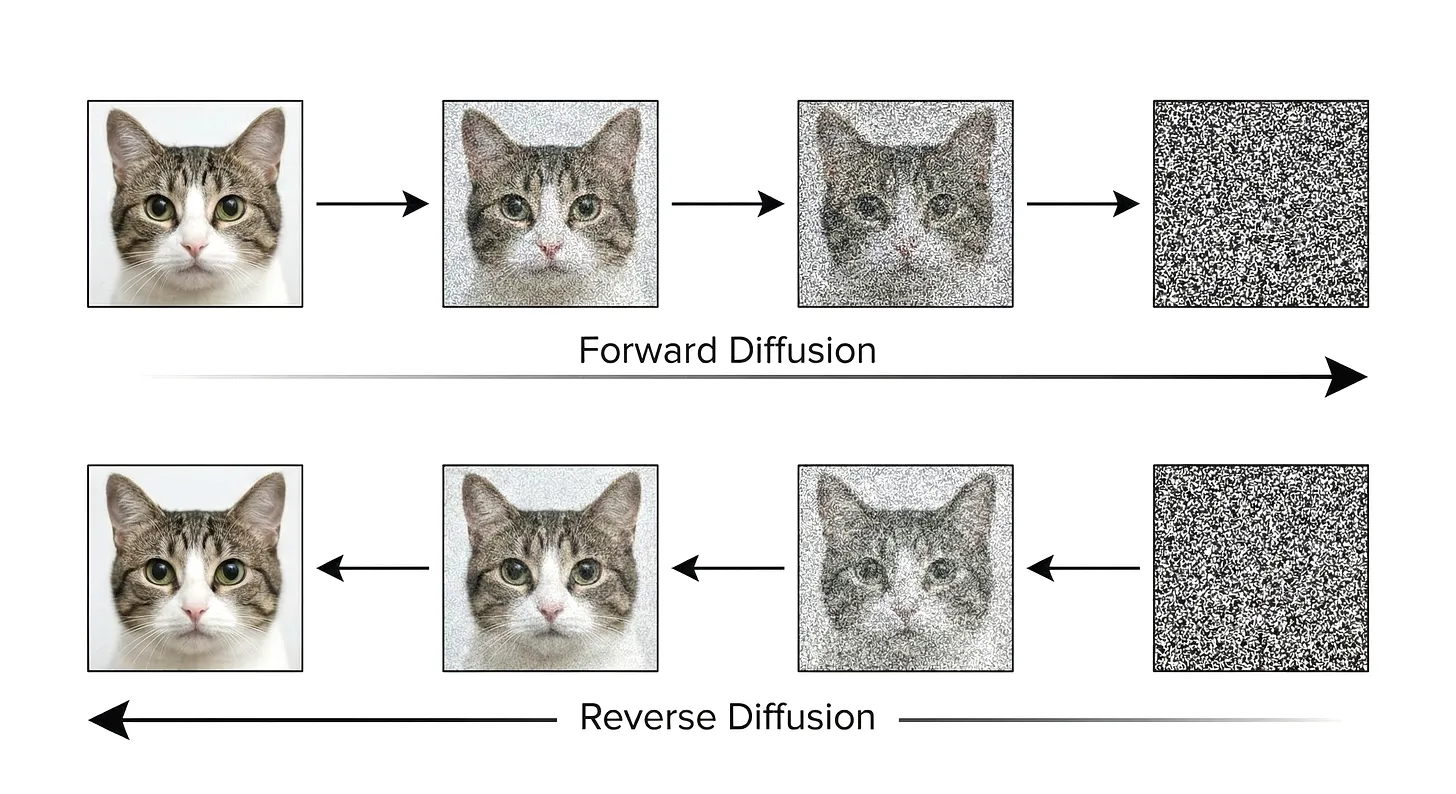

This led us to explore diffusion models, which remain extremely popular as relatively lightweight image generation systems that can run on consumer-grade hardware.

Diffusion models are trained explicitly for denoising. The reverse diffusion process iteratively removes noise from images, transforming Gaussian noise into coherent output.

This led us to hypothesize that applying a diffusion model’s denoising process to a watermarked image may remove the watermark as a side effect of the denoising operation.

Experiment

We tested this hypothesis using an SDXL-based model (WAI-Illustrious-SDXL v15), fine-tuned for anime-style imagery.

For evaluation, we selected a frame from “【OSHI NO KO】 Season 3 | Official Trailer 2 | Crunchyroll”, content we are confident is not AI-generated. The frame was cropped, resized, and converted to JPEG to produce our base image.

TrustMark

From this original, we derived a watermarked version using the official Adobe TrustMark Python package (model version Q) with the payload “kawaii”. We then processed this watermarked image through two removal pipelines:

- TrustMark-RM-Q: Adobe’s official removal model

- Diffusion denoising: WAI-Illustrious-SDXL v15 with the configuration: 28 total steps, start step 27, euler_ancestral scheduler, add_noise disabled
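To make the diffusion configuration concrete: with 28 total steps and a start step of 27, only the last step of the schedule is executed. The sketch below works through that arithmetic. It assumes zero-based step indices, as ComfyUI-style advanced samplers use, and the function name `steps_executed` is hypothetical rather than part of any library.

```python
def steps_executed(total_steps, start_step):
    """Return (steps actually run, fraction of the full schedule they cover).

    Assumes zero-based step indices: starting at step 27 of 28 leaves
    exactly one step to run.
    """
    if not 0 <= start_step < total_steps:
        raise ValueError("start_step must satisfy 0 <= start_step < total_steps")
    run = total_steps - start_step
    return run, run / total_steps


run, fraction = steps_executed(total_steps=28, start_step=27)
print(f"{run} step(s) run, covering {fraction:.1%} of the schedule")
```

Under these assumptions the image passes through a single, low-noise denoising step, which is consistent with the "single diffusion step" wording used throughout this write-up.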

We then attempted TrustMark decoding on all versions. As expected, the original image contained no recoverable payload. The TrustMark-RM-Q output and the diffusion-processed output both failed to yield the embedded secret—the latter result being the finding relevant to this investigation.

Subjectively, we judge the diffusion-based removal to produce better image quality than TrustMark-RM-Q, though both results are provided so readers can compare. However, the diffusion model does introduce localized distortions. These are most evident in the smartphone screen on the left side of the image, where the single denoising step altered small text and interface details. Such artifacts could potentially be addressed with additional effort (e.g., manual inpainting), but we present the unmodified output here.

Watermark Anything (WAM)

For WAM, we used the latest available weights as of testing (December 21st, 2025), downloaded from the official repository’s provided URL.

For reproducibility, the file checksum is SHA2-256(wam_mit.pth) = 90ef232384e023bd63245eb0c131abd69d2afc7b8f17a71ccedceb542bf009e2.
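A downloaded copy of the weights can be checked against this checksum with Python's standard `hashlib`. This is a minimal sketch; the local path `wam_mit.pth` in the current directory is an assumption, and the check is skipped if the file is not present.

```python
import hashlib
from pathlib import Path

# Checksum quoted above for wam_mit.pth.
EXPECTED = "90ef232384e023bd63245eb0c131abd69d2afc7b8f17a71ccedceb542bf009e2"


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large weight files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


weights = Path("wam_mit.pth")  # assumed download location
if weights.exists():
    print("checksum OK" if sha256_of(weights) == EXPECTED else "checksum MISMATCH")
```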

We followed the official inference notebook (notebooks/inference.ipynb) from Meta’s repository with default configurations. Since WAM supports localized watermarking of arbitrary image regions, we set proportion_masked = 1 to watermark the entire image.

WAM embeds a 32-bit payload. For our test, we used the message 01101101011011110110010100000000 (ASCII encoding of “moe” followed by a null-byte terminator).
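The payload can be reproduced by encoding the text as ASCII and padding with zero bits up to 32 bits. The helper below is an illustrative sketch (`text_to_bits` is a hypothetical name, not part of the WAM codebase).

```python
def text_to_bits(text, n_bits=32):
    """Encode ASCII text as a bit string, right-padded with zero bits."""
    bits = "".join(f"{byte:08b}" for byte in text.encode("ascii"))
    if len(bits) > n_bits:
        raise ValueError(f"{text!r} needs {len(bits)} bits, only {n_bits} available")
    # Padding with zero bits is equivalent to appending null bytes.
    return bits.ljust(n_bits, "0")


print(text_to_bits("moe"))
```

Three ASCII characters occupy 24 bits, so the remaining 8 bits form the single null byte mentioned above.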

We then processed the watermarked image through the same diffusion pipeline: WAI-Illustrious-SDXL v15, 28 total steps, start step 27, euler_ancestral scheduler, add_noise disabled.

WAM’s decoder attempts message extraction regardless of whether an image actually contains a watermark, always producing a 32-bit output. We calculated bit accuracy as the proportion of correctly recovered bits compared to the embedded message.
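The bit accuracy metric can be sketched as follows. The function is an illustration rather than code from the WAM repository; the bit strings are the embedded message and the decoder outputs reported in this section.

```python
def bit_accuracy(embedded, extracted):
    """Fraction of positions at which the extracted bits match the embedded ones."""
    if len(embedded) != len(extracted):
        raise ValueError("bit strings must have the same length")
    matches = sum(a == b for a, b in zip(embedded, extracted))
    return matches / len(embedded)


EMBEDDED = "01101101011011110110010100000000"  # "moe" plus a null byte

reported = {
    "original (no watermark)": "00000000000000000000000000000000",
    "WAM-watermarked": "01101101011011110110010100000000",
    "after diffusion (1 step)": "00000100010000000000000010000000",
}

for label, extracted in reported.items():
    print(f"{label}: {bit_accuracy(EMBEDDED, extracted):.2f}")
```

Note that the embedded message contains 17 zero bits out of 32, so an all-zeros output already scores 17/32 without recovering any information.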

Results for single-step diffusion denoising:

- Original (no watermark): extracted 00000000000000000000000000000000, bit accuracy 0.53

- WAM-watermarked: extracted 01101101011011110110010100000000, bit accuracy 1.00

- After diffusion (1 step): extracted 00000100010000000000000010000000, bit accuracy 0.56

The watermarked image decoded perfectly. After diffusion processing, bit accuracy dropped to 0.56, marginally above the 0.50 expected from random chance, and comparable to the 0.53 observed on the unwatermarked original.
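As a rough sanity check on "marginally above chance", one can ask how often 18 or more of 32 bits (0.56) would match by coincidence. The sketch below assumes each extracted bit matches independently with probability 0.5. That is a simplifying assumption, not a property of WAM's decoder, which returned all zeros on the unwatermarked image.

```python
from math import comb


def chance_tail(n_bits, n_correct):
    """P(at least n_correct of n_bits match) if each bit matches with probability 0.5."""
    favourable = sum(comb(n_bits, k) for k in range(n_correct, n_bits + 1))
    return favourable / 2 ** n_bits


p = chance_tail(32, 18)
print(f"P(>= 18 of 32 bits correct by chance) = {p:.3f}")
```

Under that assumption the probability is roughly 0.3, so a bit accuracy of 0.56 on a 32-bit message is not distinguishable from guessing.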

However, we noted that some bits in the diffusion-processed output still appeared to correlate with the original message. To determine whether any residual watermark signal persisted, we applied a second diffusion step using identical parameters.

- After diffusion (2 steps): extracted 00000000000000000000000000000000, bit accuracy 0.53

With two denoising steps, the extracted message matched the all-zeros output observed on the unwatermarked original, with identical bit accuracy. This suggests complete removal of the embedded watermark signal.

SynthID

We extended our analysis to Google’s SynthID system. Unlike TrustMark, SynthID watermarks cannot be applied manually; they are embedded automatically by Google’s generative AI tools. To obtain a SynthID-watermarked version of our test image, we used Google’s image editing capabilities.

Specifically, we employed Nano Banana Pro (internally designated as gemini-3-pro-image-preview) through the Playground interface at aistudio.google.com. Initial testing revealed that SynthID embedding requires substantive image modification; the watermark is not applied to images passed through without alteration.

To satisfy this requirement while preserving image content, we submitted the original frame with instructions to modify specific color attributes:

Update the image as follows:

⦁ Turn the character’s clothing light blue

⦁ Turn the bed sheets light blue

⦁ Turn the pillow white

Do not structurally modify the image’s elements, only their colors.

We then requested reversal of these modifications, using the original as reference:

Undo the modifications. Use the base image as a reference to restore the washed-out, muted light blue pillow color, and the whiteish appearance of the clothes and bed sheets. Retain the warm, yellowish late afternoon appearance.

The resulting image is not pixel-identical to the original, but we deemed it sufficiently close for experimental purposes. Crucially, this image now carries a SynthID watermark.

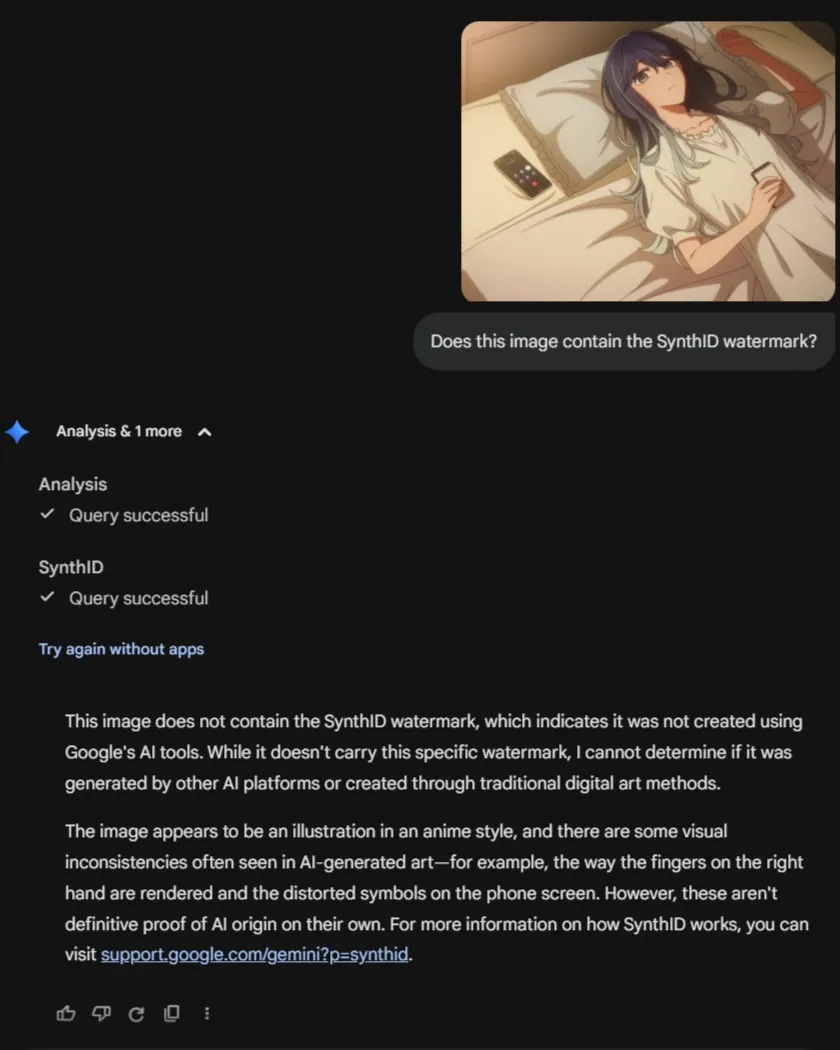

We then processed this SynthID-watermarked image through the same diffusion pipeline used for TrustMark removal: WAI-Illustrious-SDXL v15, 28 total steps, start step 27, euler_ancestral scheduler, add_noise disabled.

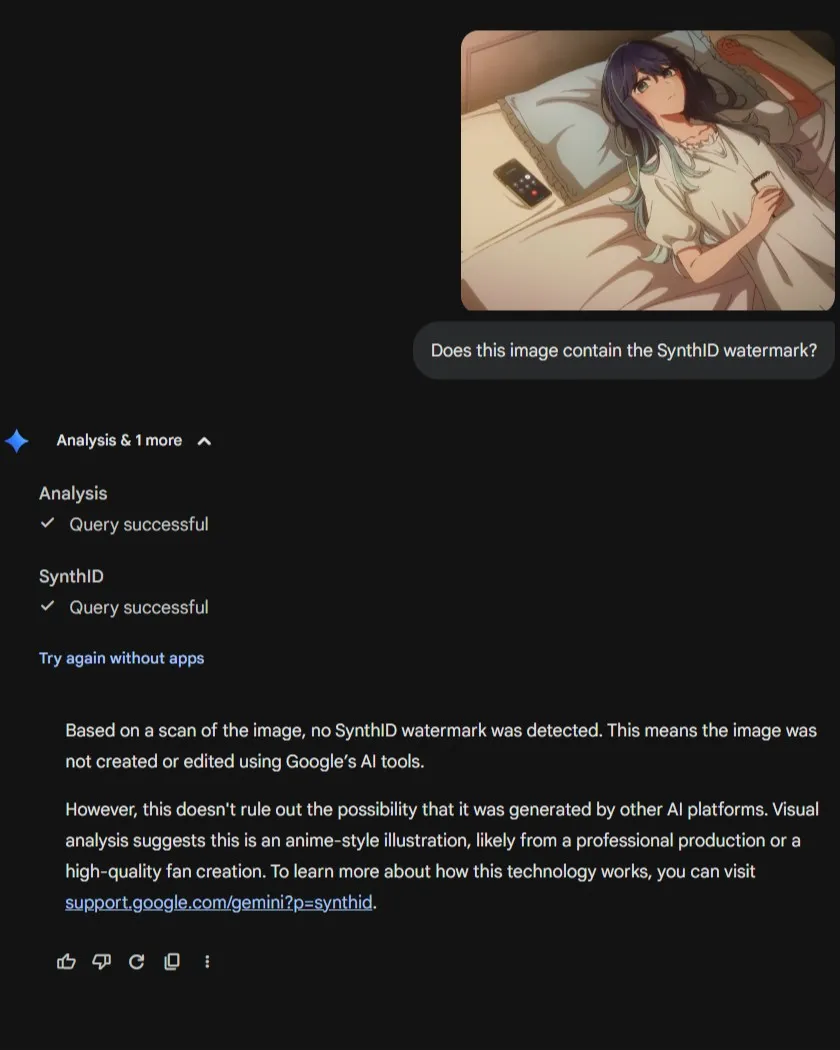

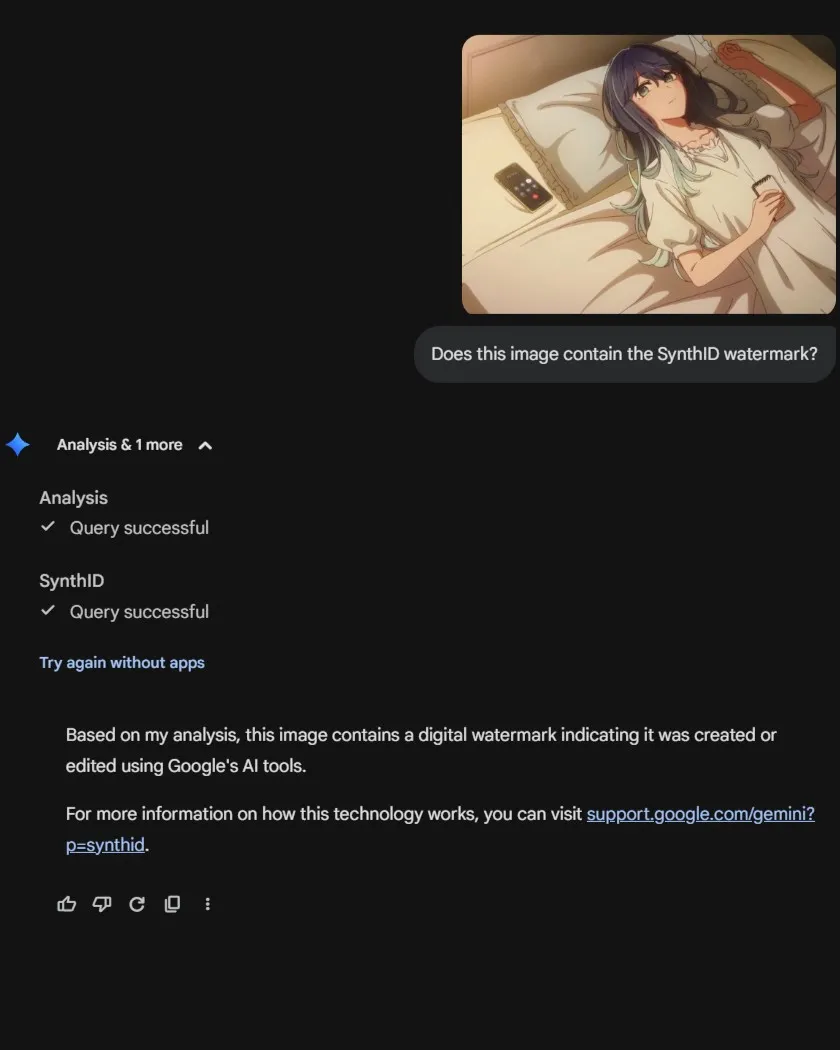

For verification, we used the public SynthID detector accessible through Gemini at gemini.google.com (configured to “Fast” mode). We queried each image with: “Does this image contain the SynthID watermark?”

On the original (non-watermarked) image, Gemini confirmed no SynthID watermark was detected.

On the Google AI-modified image, Gemini successfully detected the SynthID watermark.

On the diffusion-processed output, Gemini reported no SynthID watermark detected. The response noted “visual inconsistencies often seen in AI-generated art,” specifically identifying distortions in the phone screen region, while clarifying that “these aren’t definitive proof of AI origin on their own.”

This final observation aligns with our earlier findings regarding localized artifacts introduced by the diffusion step. However, Gemini’s heuristic analysis of visual artifacts operates independently from SynthID detection—the watermark itself was no longer recoverable, which was the primary subject of this investigation.

Notes

The model used is optimized for anime-style imagery. Effectiveness on photorealistic or other image domains requires separate evaluation.

The analyzed watermarking systems are designed for robustness against common transformations (compression, cropping, filtering). Diffusion-based denoising represents a distinct class of transformation not explicitly addressed in their design.

The accessibility of this removal technique warrants consideration when evaluating watermarking systems for content authentication purposes.

All images and configurations used in this investigation are available for download. The archive contains:

- 1_orig.jpg — Original test image (cropped, resized frame from Oshi no Ko Season 3 trailer)

- 1_trustmark-q.jpg — TrustMark-watermarked version (model Q, payload “kawaii”)

- 1_trustmark-rm-q.jpg — TrustMark watermark removed via official TrustMark-RM-Q model

- 1_trustmark-q-diffuse.jpg — TrustMark watermark removed via single diffusion step

- 1_wam.jpg — WAM-watermarked version (payload “moe”)

- 1_wam-diffuse.jpg — WAM watermark after single diffusion step

- 1_wam-diffusex2.jpg — WAM watermark after two diffusion steps

- 1_synthid.jpg — SynthID-watermarked version (produced via Google AI color modification and restoration)

- 1_synthid-diffuse.jpg — SynthID watermark removed via single diffusion step

- comfyui-watermark-removal.json — ComfyUI workflow used for diffusion-based removal; included for completeness, though the configuration is minimal